Replication Crisis

Replicability of Research

Terminology

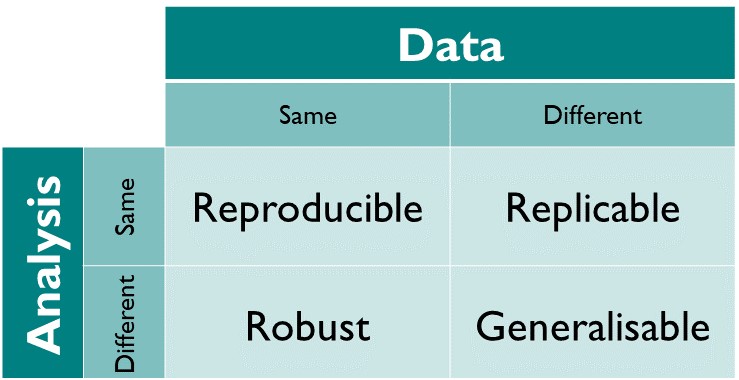

Terms like “reproducibility” and “replicability” are used differently across fields and by different researchers (Voelkl et al. 2025; Baker 2016; Nosek and Errington 2020). Here, I use the terminology depicted in Figure 1, taken from The Turing Way Community (2021). Under this terminology, a study is replicable if applying the same analysis to new data, collected in a replication study, yields results compatible with those of the original study.

How much research is replicable?

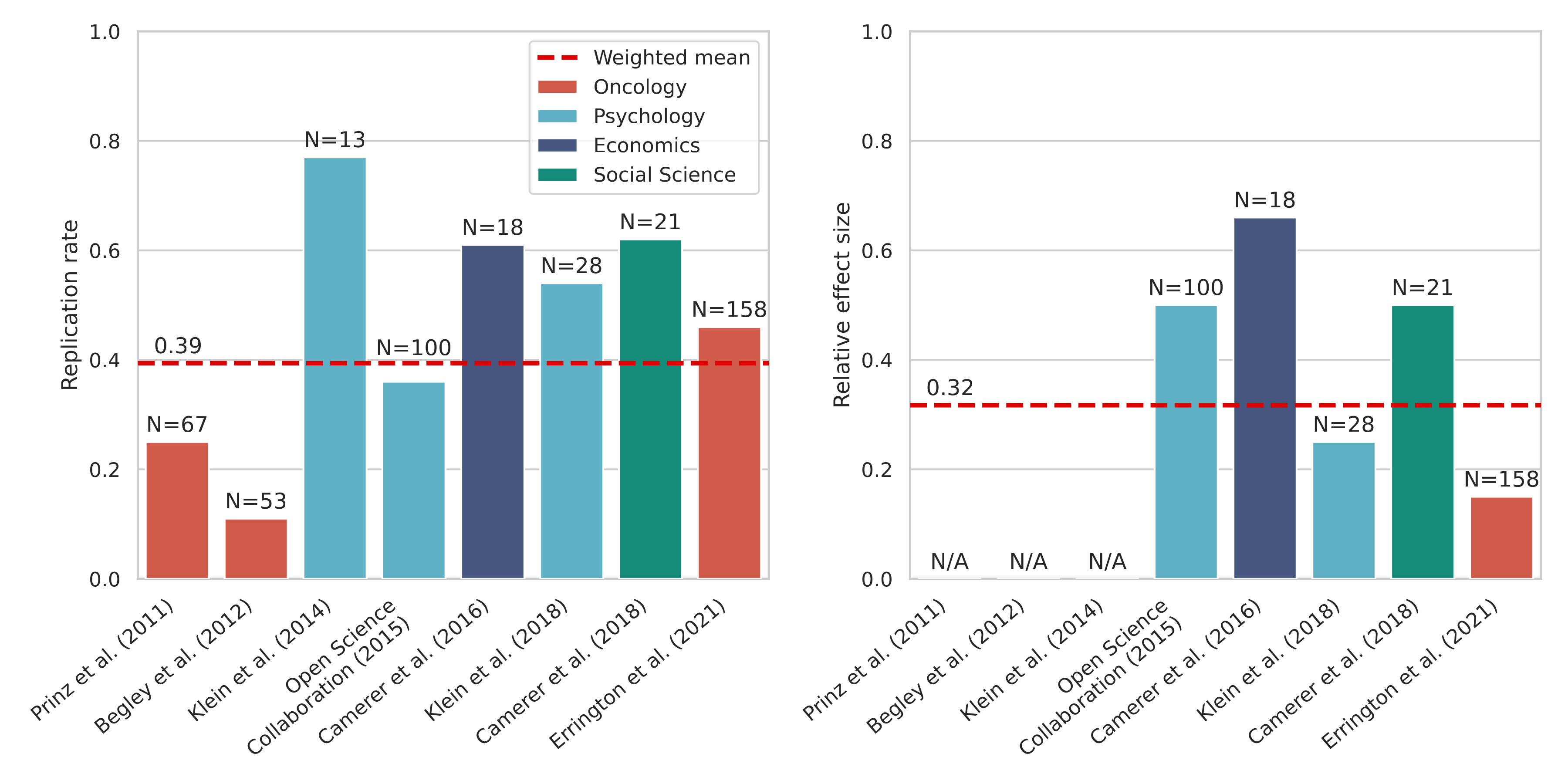

The left panel in Figure 2 shows the replication success rate of several large-scale replication studies, each as judged by its authors’ own criteria. These success criteria differ between authors, and different fields face distinct challenges, so the rates are not directly comparable; the figure serves only as a high-level overview of replication success. The right panel shows the relative effect size (replication effect size divided by original effect size). Values smaller than 1 indicate that the replicated effect is smaller than the one reported in the original study.
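To make the two panels concrete, here is a minimal Python sketch of how a success rate and relative effect sizes could be computed for a set of replication attempts. The effect sizes and p-values are hypothetical, chosen only for illustration, and the success criterion used here (same sign as the original and replication p-value below 0.05) is an assumed example; each project in Figure 2 applied its own criteria.

```python
from statistics import median


def relative_effect_size(original: float, replication: float) -> float:
    """Replication effect size divided by original effect size.

    Values below 1 mean the replicated effect is smaller than the original.
    """
    if original == 0:
        raise ValueError("original effect size must be non-zero")
    return replication / original


def is_success(original: float, replication: float, p_value: float,
               alpha: float = 0.05) -> bool:
    """Hypothetical criterion: same direction and significant replication."""
    return original * replication > 0 and p_value < alpha


# Hypothetical (original effect, replication effect, replication p-value).
attempts = [
    (0.50, 0.25, 0.03),
    (0.40, 0.40, 0.01),
    (0.80, 0.20, 0.30),
]

ratios = [relative_effect_size(o, r) for o, r, _ in attempts]
successes = [is_success(o, r, p) for o, r, p in attempts]
rate = sum(successes) / len(successes)

print(ratios)          # relative effect size per attempt
print(median(ratios))  # typical shrinkage across attempts
print(rate)            # fraction of attempts judged successful
```

With these made-up numbers, the ratios are 0.5, 1.0, and 0.25, so the median replicated effect is half the original, and two of three attempts count as successful. Reporting the median ratio rather than the mean keeps a single very large or very small ratio from dominating the summary.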

Sources:

Prinz, Schlange, and Asadullah (2011); Begley and Ellis (2012); Klein et al. (2014); Open Science Collaboration (2015); Camerer et al. (2016); Klein et al. (2018); Camerer et al. (2018); Errington et al. (2021)

Preprints not yet included in the figure:

- Estimating the replicability of Brazilian biomedical science

- A retrospective analysis of 400 publications reveals patterns of irreproducibility across an entire life sciences research field

To read:

- Brodeur, Mikola, and Cook (2024)